Web Scraping using Python

July 26th, 2018

In this tutorial, you'll learn how to extract data from the web, manipulate and clean data using Python's Pandas library, and visualize data using Python's Matplotlib library.

Web scraping is a term used to describe the use of a program or algorithm to extract and process large amounts of data from the web. Whether you are a data scientist, engineer, or anybody who analyzes large datasets, the ability to scrape data from the web is a useful skill to have. Let's say you find data on the web and there is no direct way to download it. Web scraping using Python is a skill you can use to extract that data into a useful form that can be imported.

In this tutorial, you will learn about the following:

• Data extraction from the web using Python's Beautiful Soup module

• Data manipulation and cleaning using Python's Pandas library

• Data visualization using Python's Matplotlib library

The dataset used in this tutorial was taken from a 10K race that took place in Hillsboro, OR in June 2017. Specifically, you will analyze the performance of the 10K runners and answer questions such as:

• What was the average finish time for the runners?

• Did the runners' finish times follow a normal distribution?

• Were there any performance differences between males and females of various age groups?

Web Scraping using Beautiful Soup

Using Jupyter Notebook, you should start by importing the necessary modules (pandas, numpy, matplotlib.pyplot, seaborn). If you don't have Jupyter Notebook installed, I recommend installing it with the Anaconda Python distribution. To easily display the plots, make sure to include the line %matplotlib inline as shown below.
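A minimal sketch of that import cell might look like the following. The aliases (pd, np, plt, sns) are common conventions rather than requirements, and the %matplotlib inline magic is left as a comment because it only works inside a Jupyter notebook.

```python
# In a Jupyter notebook, run this magic in its own cell first:
#   %matplotlib inline
import pandas as pd                # dataframes
import numpy as np                 # numeric helpers
import matplotlib.pyplot as plt    # plotting
import seaborn as sns              # statistical plots built on matplotlib
```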

To perform web scraping, you should also import the libraries shown below. The urllib.request module is used to open URLs. The Beautiful Soup package is used to extract data from html files. The Beautiful Soup library's name is bs4 which stands for Beautiful Soup, version 4.

After importing necessary modules, you should specify the URL containing the dataset and pass it to urlopen() to get the html of the page.
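The imports and the request can be sketched as follows. The URL is a placeholder (an assumption, not the address of the race results), and fetch_html is a hypothetical helper name. The actual network call is left commented out so that nothing is downloaded until you supply a real address.

```python
from urllib.request import urlopen
from bs4 import BeautifulSoup  # imported here, used in the next step

# Placeholder address (an assumption): substitute the page that holds your table.
url = "https://example.com/race-results"


def fetch_html(page_url):
    """Open the URL and return the raw bytes of the page."""
    with urlopen(page_url) as response:
        return response.read()


# html = fetch_html(url)  # uncomment to perform the actual request
```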

Getting the html of the page is just the first step. The next step is to create a Beautiful Soup object from the html. This is done by passing the html to the BeautifulSoup() function. The Beautiful Soup package is used to parse the html, that is, take the raw html text and break it into Python objects. The second argument 'lxml' is the html parser whose details you do not need to worry about at this point.

The soup object allows you to extract interesting information about the website you're scraping such as getting the title of the page as shown below.

You can also get the text of the webpage and quickly print it out to check if it is what you expect.
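Here is a small sketch of these three steps: building the soup, reading the title, and dumping the page text. The html string is a made-up stand-in for the downloaded page. The sketch passes Python's built-in "html.parser" so that it runs without extra installs; if lxml is available, 'lxml' can be passed instead, as the tutorial describes.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for the downloaded page.
html = """
<html>
  <head><title>Sample 10K Race Results</title></head>
  <body>
    <h1>Results</h1>
    <p>Finishers: 3</p>
  </body>
</html>
"""

# Assumption: "html.parser" is used instead of 'lxml' to avoid an extra dependency.
soup = BeautifulSoup(html, "html.parser")

title = soup.title         # the <title> tag as a Python object
print(title)               # <title>Sample 10K Race Results</title>
print(title.get_text())    # Sample 10K Race Results

text = soup.get_text()     # all text on the page, tags removed
print(text)
```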

You can view the html of the webpage by right-clicking anywhere on the webpage and selecting "Inspect." This is what the result looks like.

You can use the find_all() method of soup to extract useful html tags within a webpage. Examples of useful tags include < a > for hyperlinks, < table > for tables, < tr > for table rows, < th > for table headers, and < td > for table cells. The code below shows how to extract all the hyperlinks within the webpage.

As you can see from the output above, html tags sometimes come with attributes such as class, src, etc. These attributes provide additional information about html elements. You can use a for loop and the get("href") method to extract and print out only hyperlinks.
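A short sketch of both steps, finding every hyperlink tag and then pulling out only the href attribute, could look like this. The html snippet and its addresses are invented for illustration.

```python
from bs4 import BeautifulSoup

# Hypothetical page fragment with three anchor tags.
html = """
<body>
  <a href="mailto:timing@example.com">Contact</a>
  <a class="nav" href="/results/5k">5K results</a>
  <a name="top">No link target here</a>
</body>
"""
soup = BeautifulSoup(html, "html.parser")

all_links = soup.find_all("a")   # list of tag objects, attributes included
print(all_links)

hrefs = []
for link in all_links:
    # get() returns None when the tag has no such attribute
    hrefs.append(link.get("href"))
    print(link.get("href"))
```

Note that get() returns None instead of raising an error for the anchor without an href, which is convenient when pages mix link styles.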

To print out table rows only, pass the 'tr' argument in soup.find_all().

The goal of this tutorial is to take a table from a webpage and convert it into a dataframe for easier manipulation using Python. To get there, you should get all table rows in list form first and then convert that list into a dataframe. Below is a for loop that iterates through table rows and prints out the cells of the rows.
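The row and cell extraction can be sketched as below, using a tiny made-up table in place of the race results. The variable name row_td is an assumption chosen for this sketch.

```python
from bs4 import BeautifulSoup

# Hypothetical table with one header row and two data rows.
html = """
<table>
  <tr><th>Place</th><th>Name</th></tr>
  <tr><td>1</td><td>RUNNER ONE</td></tr>
  <tr><td>2</td><td>RUNNER TWO</td></tr>
</table>
"""
soup = BeautifulSoup(html, "html.parser")

rows = soup.find_all("tr")   # every table row, header row included
print(rows[:10])             # quick look at the first few rows

for row in rows:
    row_td = row.find_all("td")   # cells of this row; empty for the header row
    print(row_td)
```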

The output above shows that each row is printed with html tags embedded in each row. This is not what you want. You can remove the html tags using Beautiful Soup or regular expressions.

The easiest way to remove html tags is to use Beautiful Soup, and it takes just one line of code to do this. Pass the string of interest into BeautifulSoup() and use the get_text() method to extract the text without html tags.
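As a rough sketch of this one-line cleanup, the cells of a row are first converted to a string and then passed back through BeautifulSoup(). The sample row is invented, and "html.parser" is assumed here so the sketch needs no extra parser.

```python
from bs4 import BeautifulSoup

html = "<table><tr><td>1</td><td>814</td><td>RUNNER ONE</td></tr></table>"
soup = BeautifulSoup(html, "html.parser")

row_td = soup.find("tr").find_all("td")
str_cells = str(row_td)   # list of tags rendered as one string, brackets and commas included

# The single line that strips the tags:
clean_text = BeautifulSoup(str_cells, "html.parser").get_text()
print(clean_text)         # [1, 814, RUNNER ONE]
```

The square brackets and commas in the result come from converting the list of cells to a string, which is why later cleaning steps have to deal with them.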

Using regular expressions is highly discouraged since it requires several lines of code and one can easily make mistakes. It requires importing the re (regular expressions) module. The code below shows how to build a regular expression that finds all the html tags in a row, that is, the characters inside each pair of angle brackets such as < td >, and replaces them with an empty string. First, you compile a regular expression by passing the pattern string to re.compile(). Placed between angle brackets, the dot, star, and question mark (.*?) match an opening angle bracket followed by anything and followed by a closing angle bracket. The question mark makes the match non-greedy, that is, it matches the shortest possible string. If you omit the question mark, the pattern matches all the text between the first opening angle bracket and the last closing angle bracket. After compiling a regular expression, you can use the re.sub() function to find all the substrings where the regular expression matches and replace them with an empty string. The full code below generates an empty list, extracts the text in between html tags for each row, and appends it to the list.
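The regular-expression route described above might be sketched like this. The table contents are invented, and names such as tag_pattern and list_rows are assumptions for this sketch. The last lines contrast the greedy and non-greedy forms of the pattern.

```python
import re
from bs4 import BeautifulSoup

# Hypothetical two-row table.
html = """
<table>
  <tr><td>1</td><td>814</td><td>RUNNER ONE</td></tr>
  <tr><td>2</td><td>573</td><td>RUNNER TWO</td></tr>
</table>
"""
soup = BeautifulSoup(html, "html.parser")

tag_pattern = re.compile(r"<.*?>")   # non-greedy: each match stops at the first '>'

list_rows = []
for row in soup.find_all("tr"):
    str_cells = str(row.find_all("td"))                    # cells as one string
    list_rows.append(re.sub(tag_pattern, "", str_cells))   # delete every tag

print(list_rows)

# Greedy vs. non-greedy on a single cell:
non_greedy = re.sub(r"<.*?>", "", "<td>814</td>")   # removes the two tags only
greedy = re.sub(r"<.*>", "", "<td>814</td>")        # removes everything, text included
```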

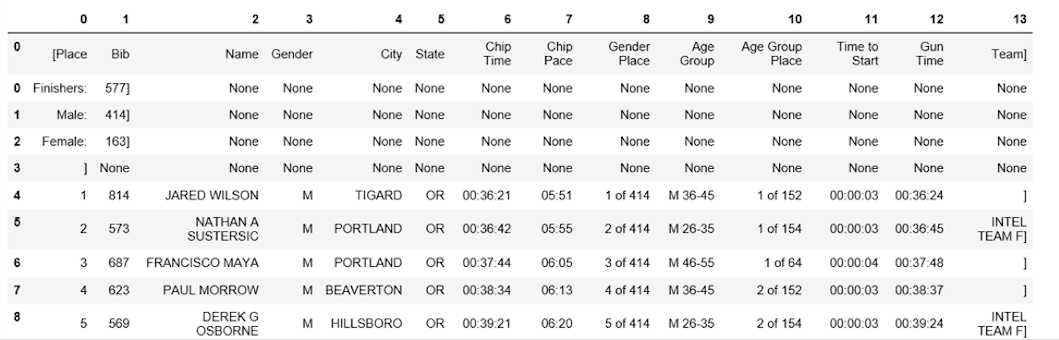

The next step is to convert the list into a dataframe and get a quick view of the first 10 rows using Pandas.
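A minimal sketch of this conversion follows. The list below is a hypothetical, shortened stand-in for the cleaned rows, written in the same bracketed-string form that the tag-stripping step yields.

```python
import pandas as pd

# Hypothetical cleaned rows (one string per table row).
list_rows = [
    "[Finishers:, 3]",
    "[]",
    "[1, 814, RUNNER ONE, M, 00:36:21]",
    "[2, 573, RUNNER TWO, F, 00:36:42]",
]

df = pd.DataFrame(list_rows)   # a single column, labeled 0
print(df.head(10))             # first 10 rows (fewer if the frame is shorter)
```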

|   | 0 |
|---|---|
| 0 | [Finishers:, 577] |
| 1 | [Male:, 414] |
| 2 | [Female:, 163] |
| 3 | [] |
| 4 | [1, 814, JARED WILSON, M, TIGARD, OR, 00:36:21... |
| 5 | [2, 573, NATHAN A SUSTERSIC, M, PORTLAND, OR, ... |
| 6 | [3, 687, FRANCISCO MAYA, M, PORTLAND, OR, 00:3... |
| 7 | [4, 623, PAUL MORROW, M, BEAVERTON, OR, 00:38:... |
| 8 | [5, 569, DEREK G OSBORNE, M, HILLSBORO, OR, 00... |
| 9 | [6, 642, JONATHON TRAN, M, PORTLAND, OR, 00:39... |

Data Manipulation and Cleaning

The dataframe is not in the format we want. To clean it up, you should split the "0" column into multiple columns at the comma position. This is accomplished by using the str.split() method.

This looks much better, but there is still work to do. The dataframe has unwanted square brackets surrounding each row. You can use the strip() method to remove the opening square bracket on column "0."
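The split and the bracket cleanup can be sketched together as follows. The two rows are invented, and df1 is an assumed name for the split result.

```python
import pandas as pd

# Hypothetical single-column frame, as produced by the previous step.
df = pd.DataFrame([
    "[1, 814, RUNNER ONE, M, 00:36:21]",
    "[2, 573, RUNNER TWO, F, 00:36:42]",
])

df1 = df[0].str.split(",", expand=True)   # one new column per comma-separated field
df1[0] = df1[0].str.strip("[")            # "[1" becomes "1"
print(df1.head(10))
```

After this step the last column still ends with a closing bracket, and every field after the first keeps its leading space. Both are handled later in the cleanup.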

The table is missing table headers. You can use the find_all() method to get the table headers.

Similar to table rows, you can use Beautiful Soup to extract text in between html tags for table headers.

You can then convert the list of headers into a pandas dataframe.
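The three header steps (find the header cells, strip their tags, build a dataframe) might look like the sketch below. The header row is a shortened, made-up example, and names such as col_labels, all_header, and df2 are assumptions.

```python
from bs4 import BeautifulSoup
import pandas as pd

html = "<table><tr><th>Place</th><th>Bib</th><th>Name</th></tr></table>"
soup = BeautifulSoup(html, "html.parser")

col_labels = soup.find_all("th")   # header cells
print(col_labels)

all_header = []
col_str = str(col_labels)                                             # cells as one string
all_header.append(BeautifulSoup(col_str, "html.parser").get_text())   # tags removed
print(all_header)

df2 = pd.DataFrame(all_header)   # one row, one column
print(df2.head())
```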

|   | 0 |
|---|---|
| 0 | [Place, Bib, Name, Gender, City, State, Chip T... |

Similarly, you can split column "0" into multiple columns at the comma position for all rows.

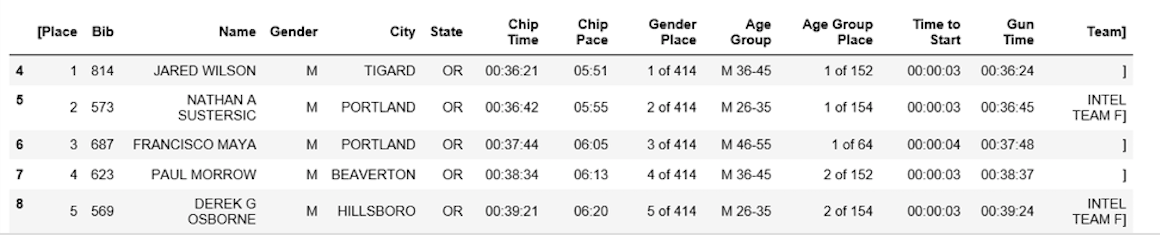

The two dataframes can be concatenated into one using the concat() method as illustrated below.

You can then assign the first row to be the table header.
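Splitting the header, concatenating, and promoting the first row to column labels can be sketched in a few lines. The frames here are small, hypothetical stand-ins, and the names df2 through df4 are assumptions (df5 is the name the tutorial uses for the result).

```python
import pandas as pd

# Hypothetical header frame and already-split data frame.
df2 = pd.DataFrame(["[Place, Bib, Name]"])
df1 = pd.DataFrame([["1", " 814", " RUNNER ONE]"],
                    ["2", " 573", " RUNNER TWO]"]])

df3 = df2[0].str.split(",", expand=True)   # header split at the commas
df4 = pd.concat([df3, df1])                # header row stacked on top of the data
df5 = df4.rename(columns=df4.iloc[0])      # first row becomes the column labels
print(df5.head())
```

The header row is still present as the first data row of df5 at this point, and the labels keep their stray bracket and spaces.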

At this point, the table is almost properly formatted. For analysis, you can start by getting an overview of the data as shown below.

The table has 597 rows and 14 columns. You can drop all rows with any missing values.

Also, notice how the table header is replicated as the first row in df5. It can be dropped using the following line of code.
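The overview and the two dropping steps could be sketched as follows. The frame is a small invented example, and df6 and df7 are assumed names for the intermediate results.

```python
import pandas as pd

# Hypothetical frame: header row repeated as data, plus one row with a missing value.
df5 = pd.DataFrame(
    [["[Place", " Name", " Team]"],
     ["1", " RUNNER ONE", " CLUB A]"],
     ["2", " RUNNER TWO", None],
     ["3", " RUNNER THREE", " CLUB B]"]],
    columns=["[Place", " Name", " Team]"],
)

df5.info()          # column types and non-null counts
print(df5.shape)    # (rows, columns)

df6 = df5.dropna(axis=0, how="any")   # drop rows with any missing value
df7 = df6.drop(df6.index[0])          # drop the replicated header row
print(df7)
```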

You can perform more data cleaning by renaming the '[Place' and ' Team]' columns. Column labels are matched exactly, whitespace included, so make sure you keep the space after the opening quotation mark in ' Team]'.

The final data cleaning step involves removing the closing bracket for cells in the "Team" column.
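A sketch of the renaming and the final bracket removal, on a made-up two-row frame:

```python
import pandas as pd

# Hypothetical frame with the uncleaned labels.
df7 = pd.DataFrame(
    [["1", " RUNNER ONE", " CLUB A]"], ["3", " RUNNER THREE", " CLUB B]"]],
    columns=["[Place", " Name", " Team]"],
)

# Keys must match the existing labels exactly, including the leading space.
df7 = df7.rename(columns={"[Place": "Place", " Team]": "Team"})

df7["Team"] = df7["Team"].str.strip("]")   # " CLUB A]" becomes " CLUB A"
print(df7.head())
```

If a key in the rename mapping does not match any label, pandas leaves the columns unchanged without raising an error by default, so a missing space fails silently.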

It took a while to get here, but at this point, the dataframe is in the desired format. Now you can move on to the exciting part and start plotting the data and computing interesting statistics.

Data Analysis and Visualization

The first question to answer is, what was the average finish time (in minutes) for the runners? You need to convert the column "Chip Time" into just minutes. One way to do this is to convert the column to a list first for manipulation.

The next step is to convert the list back into a dataframe and make a new column ("Runner_mins") for runner chip times expressed in just minutes.
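One way to sketch the conversion is shown below. It assumes every chip time has the form HH:MM:SS, as in the rows shown earlier. The column label "Chip Time" is taken from the text, so adjust it if your label kept a leading space from the split. The three sample times are illustrative.

```python
import pandas as pd

# Hypothetical chip times; values may carry a leading space from the split step.
df7 = pd.DataFrame({"Chip Time": [" 00:36:21", " 00:59:01", " 01:41:18"]})

time_list = df7["Chip Time"].tolist()

time_mins = []
for t in time_list:
    h, m, s = t.strip().split(":")   # assumes the HH:MM:SS format
    time_mins.append((int(h) * 3600 + int(m) * 60 + int(s)) / 60)

df7["Runner_mins"] = time_mins       # chip time expressed in minutes
print(df7.head())
```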

The code below shows how to calculate statistics for numeric columns only in the dataframe.
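A minimal sketch of that call, on an invented three-runner frame, uses describe() with include=[np.number] so that text columns are skipped:

```python
import numpy as np
import pandas as pd

# Hypothetical frame with one text column and one numeric column.
df7 = pd.DataFrame({
    "Name": ["RUNNER ONE", "RUNNER TWO", "RUNNER THREE"],
    "Runner_mins": [36.35, 59.0, 101.3],
})

summary = df7.describe(include=[np.number])   # numeric columns only
print(summary)
```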

|       | Runner_mins |
|-------|-------------|
| count | 577.000000 |
| mean  | 60.035933 |
| std   | 11.970623 |
| min   | 36.350000 |
| 25%   | 51.000000 |
| 50%   | 59.016667 |
| 75%   | 67.266667 |
| max   | 101.300000 |

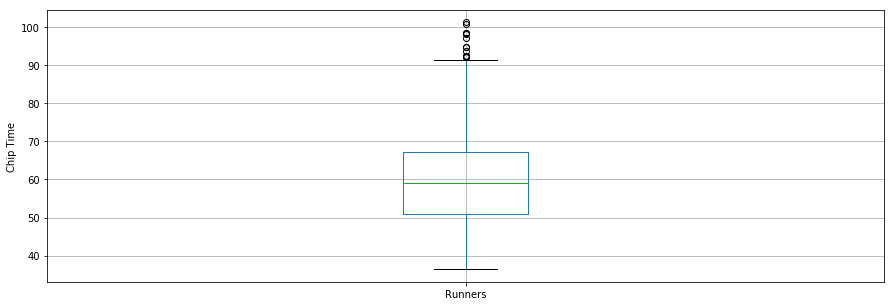

Interestingly, the average chip time for all runners was ~60 mins. The fastest 10K runner finished in 36.35 mins, and the slowest runner finished in 101.30 minutes.

A boxplot is another useful tool to visualize summary statistics (maximum, minimum, median, first quartile, third quartile, including outliers). Below are data summary statistics for the runners shown in a boxplot. For data visualization, it is convenient to first import parameters from the pylab module that comes with matplotlib and set the same size for all figures to avoid doing it for each figure.
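The figure-size setting and the boxplot might be sketched as below. This version reaches the settings through plt.rcParams, which is the same object that pylab exposes as rcParams. The figure size, the sample times, and the axis label are assumptions, and the "Agg" backend line is only there so the sketch also runs outside a notebook.

```python
import matplotlib
matplotlib.use("Agg")   # assumption: headless backend; not needed inside Jupyter
import matplotlib.pyplot as plt
import pandas as pd

plt.rcParams["figure.figsize"] = (15, 5)   # default size for all later figures

# Hypothetical chip times in minutes.
df7 = pd.DataFrame({"Runner_mins": [36.35, 51.0, 59.0, 67.3, 101.3]})

ax = df7.boxplot(column="Runner_mins")     # returns the matplotlib Axes
ax.set_ylabel("Chip Time (mins)")
```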

The second question to answer is: Did the runners' finish times follow a normal distribution?

Below is a distribution plot of runners' chip times plotted using the seaborn library. The distribution looks almost normal.

The third question deals with whether there were any performance differences between males and females of various age groups. Below is a distribution plot of chip times for males and females.

The distribution indicates that females were slower than males on average. You can use the groupby() method to compute summary statistics for males and females separately as shown below.
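A sketch of the grouped statistics, using four invented runners, is shown below. The column label "Gender" and the codes "M" and "F" are assumptions here. In the scraped table they may carry a leading space left over from the split.

```python
import pandas as pd

# Hypothetical data: two male and two female finish times in minutes.
df7 = pd.DataFrame({
    "Gender": ["M", "F", "M", "F"],
    "Runner_mins": [40.0, 50.0, 60.0, 70.0],
})

g_stats = df7.groupby("Gender").describe()   # one row of statistics per gender
print(g_stats)

female_mean = g_stats.loc["F", ("Runner_mins", "mean")]
male_mean = g_stats.loc["M", ("Runner_mins", "mean")]
```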

The average chip time for all females and males was ~66 mins and ~58 mins, respectively. Below is a side-by-side boxplot comparison of male and female finish times.
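The side-by-side comparison can be sketched with the by argument of pandas' boxplot(). The data, column labels, and axis label are assumptions, and the "Agg" backend line only keeps the sketch runnable outside a notebook.

```python
import matplotlib
matplotlib.use("Agg")   # assumption: headless backend; not needed inside Jupyter
import matplotlib.pyplot as plt
import pandas as pd

# Hypothetical finish times in minutes.
df7 = pd.DataFrame({
    "Gender": ["M", "F", "M", "F", "M", "F"],
    "Runner_mins": [40.0, 50.0, 58.0, 66.0, 75.0, 90.0],
})

df7.boxplot(column="Runner_mins", by="Gender")   # one box per gender
plt.ylabel("Chip Time (mins)")
plt.suptitle("")                                 # clear the automatic super-title
fig = plt.gcf()
```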

Conclusion

In this tutorial, you performed web scraping using Python. You used the Beautiful Soup library to parse html data and convert it into a form that can be used for analysis. You performed cleaning of the data in Python and created useful plots (box plots, bar plots, and distribution plots) to reveal interesting trends using Python's matplotlib and seaborn libraries. After this tutorial, you should be able to use Python to easily scrape data from the web, apply cleaning techniques and extract useful insights from the data.

Reference: https://www.datacamp.com
