Stock Market Predictions

2 Jan 2020

Stock Market Predictions with LSTM in Python

Discover Long Short-Term Memory (LSTM) networks in Python and how you can use them to make stock market predictions

In this tutorial, you will see how you can use a time-series model known as Long Short-Term Memory. LSTM models are powerful, especially for retaining a long-term memory, by design, as you will see later. You'll tackle the following topics in this tutorial:

Understand why you would need to be able to predict stock price movements;

Download the data - You will be using stock market data gathered from Yahoo finance;

Split train-test data and also perform some data normalization;

Go over and apply a few averaging techniques that can be used for one-step ahead predictions;

Motivate and briefly discuss an LSTM model, as it allows you to predict more than one step ahead;

Predict and visualize the future stock market with current data.

If you're not familiar with deep learning or neural networks, you should take a look at our Deep Learning in Python course. It covers the basics, as well as how to build a neural network on your own in Keras. This is a different package than TensorFlow, which will be used in this tutorial, but the idea is the same.

Why Do You Need Time Series Models?

You would like to model stock prices correctly, so as a stock buyer you can reasonably decide when to buy stocks and when to sell them to make a profit. This is where time series modelling comes in. You need good machine learning models that can look at the history of a sequence of data and correctly predict what the future elements of the sequence are going to be.

Warning: Stock market prices are highly unpredictable and volatile. This means that there are no consistent patterns in the data that allow you to model stock prices over time near-perfectly. Don't take it from me, take it from Princeton University economist Burton Malkiel, who argues in his 1973 book, "A Random Walk Down Wall Street," that if the market is truly efficient and a share price reflects all factors immediately as soon as they're made public, a blindfolded monkey throwing darts at a newspaper stock listing should do as well as any investment professional.

However, let's not go all the way believing that this is just a stochastic or random process and that there is no hope for machine learning. Let's see if you can at least model the data, so that the predictions you make correlate with the actual behavior of the data. In other words, you don't need the exact stock values of the future, but the stock price movements (that is, whether the price is going to rise or fall in the near future).

# Make sure that you have all these libraries available to run the code successfully

from pandas_datareader import data

import matplotlib.pyplot as plt

import pandas as pd

import datetime as dt

import urllib.request, json

import os

import numpy as np

import tensorflow as tf # This code has been tested with TensorFlow 1.6

from sklearn.preprocessing import MinMaxScaler

Downloading the Data

You will be using data from the following sources:

Alpha Vantage. Before you start, however, you will first need an API key, which you can obtain for free here. After that, you can assign that key to the api_key variable.

Use the data from this page. You will need to copy the Stocks folder in the zip file to your project home folder.

Stock prices come in several different flavours. They are,

Open: Opening stock price of the day

Close: Closing stock price of the day

High: Highest stock price of the day

Low: Lowest stock price of the day

Getting Data from Alpha Vantage

You will first load in the data from Alpha Vantage. Since you're going to make use of the American Airlines stock market prices to make your predictions, you set the ticker to "AAL". Additionally, you also define a url_string, which will return a JSON file with all the stock market data for American Airlines within the last 20 years, and a file_to_save, which will be the file to which you save the data. You'll use the ticker variable that you defined beforehand to help name this file.

Next, you're going to specify a condition: if you haven't already saved data, you will go ahead and grab the data from the URL that you set in url_string. You'll store the date, low, high, volume, close, and open values in a pandas DataFrame df and save it to file_to_save. However, if the data is already there, you'll just load it from the CSV.
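The download-or-reuse logic described above might be sketched as follows. This is a minimal sketch, not the tutorial's exact code: the query URL, the "Time Series (Daily)" key, the numbered field names such as "1. open", and the CSV file name are assumptions about the Alpha Vantage daily endpoint and should be checked against a real response. The helper names parse_daily_json and load_stock_data are hypothetical.

```python
import json
import os
import urllib.request

import pandas as pd

# Assumed layout of a daily time-series response; verify against a real reply.
TIME_SERIES_KEY = "Time Series (Daily)"


def parse_daily_json(payload):
    """Turn a decoded daily payload into a DataFrame sorted by date."""
    rows = []
    for date_str, fields in payload[TIME_SERIES_KEY].items():
        rows.append({
            "Date": pd.to_datetime(date_str),
            "Low": float(fields["3. low"]),
            "High": float(fields["2. high"]),
            "Volume": float(fields["5. volume"]),
            "Close": float(fields["4. close"]),
            "Open": float(fields["1. open"]),
        })
    return pd.DataFrame(rows).sort_values("Date").reset_index(drop=True)


def load_stock_data(ticker, api_key, cache_dir="."):
    """Download the data once, then reuse the CSV saved on disk."""
    # Hypothetical file name built from the ticker.
    file_to_save = os.path.join(cache_dir, "stock_market_data-%s.csv" % ticker)
    if os.path.exists(file_to_save):
        return pd.read_csv(file_to_save, parse_dates=["Date"])
    # Assumed query format for the daily endpoint.
    url_string = (
        "https://www.alphavantage.co/query?function=TIME_SERIES_DAILY"
        "&symbol=%s&outputsize=full&apikey=%s" % (ticker, api_key)
    )
    with urllib.request.urlopen(url_string) as url:
        payload = json.loads(url.read().decode())
    df = parse_daily_json(payload)
    df.to_csv(file_to_save, index=False)
    return df
```

Calling load_stock_data("AAL", api_key) would hit the network the first time and read the CSV afterwards. Keeping the parsing in its own function makes it easy to check against a small hand-written payload without an API key.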

Getting Data from Kaggle

Data found on Kaggle is a collection of csv files and you don't have to do any preprocessing, so you can directly load the data into a Pandas DataFrame.

Data Exploration

Here you will print the data you collected into the DataFrame. You should also make sure that the data is sorted by date, because the order of the data is crucial in time series modelling.

| | Date | Open | High | Low | Close |
|---|---|---|---|---|---|
| 0 | 1970-01-02 | 0.30627 | 0.30627 | 0.30627 | 0.30627 |
| 1 | 1970-01-05 | 0.30627 | 0.31768 | 0.30627 | 0.31385 |
| 2 | 1970-01-06 | 0.31385 | 0.31385 | 0.30996 | 0.30996 |
| 3 | 1970-01-07 | 0.31385 | 0.31385 | 0.31385 | 0.31385 |
| 4 | 1970-01-08 | 0.31385 | 0.31768 | 0.31385 | 0.31385 |

Data Visualization

Now let's see what sort of data you have. You want data with various patterns occurring over time.

This graph already says a lot of things. The specific reason I picked this company over others is that this graph is bursting with different behaviors of stock prices over time. This will make the learning more robust as well as give you a chance to test how good the predictions are for a variety of situations.

Another thing to notice is that the values close to 2017 are much higher and fluctuate more than the values close to the 1970s. Therefore you need to make sure that the data behaves in similar value ranges throughout the time frame. You will take care of this during the data normalization phase.

Splitting Data into a Training set and a Test set

You will use the mid price calculated by taking the average of the highest and lowest recorded prices on a day.

Now you can split the training data and test data. The training data will be the first 11,000 data points of the time series and the rest will be test data.
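As a rough illustration of the two steps above, the mid price and the chronological split could look like this. The helper names mid_prices and split_train_test are hypothetical, and the five-row sample is only there to keep the example small; with the real data you would keep n_train at 11,000.

```python
import numpy as np


def mid_prices(high, low):
    """Average of the day's highest and lowest recorded prices."""
    return (np.asarray(high, dtype=float) + np.asarray(low, dtype=float)) / 2.0


def split_train_test(series, n_train=11000):
    """Chronological split: the first n_train points train, the rest test."""
    series = np.asarray(series)
    return series[:n_train], series[n_train:]


# Tiny sample taken from the first rows of the table shown earlier.
high = [0.30627, 0.31768, 0.31385, 0.31385, 0.31768]
low = [0.30627, 0.30627, 0.30996, 0.31385, 0.31385]
mid = mid_prices(high, low)
train_data, test_data = split_train_test(mid, n_train=3)
```

The split is never shuffled: the test set must come strictly after the training set in time, otherwise the model would be evaluated on data older than what it was trained on.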

Normalizing the Data

Now you need to define a scaler to normalize the data. MinMaxScaler scales all the data to be in the range of 0 to 1. You can also reshape the training and test data to be in the shape [data_size, num_features].

Due to the observation you made earlier, that is, different time periods of data have different value ranges, you normalize the data by splitting the full series into windows. If you don't do this, the earlier data will be close to 0 and will not add much value to the learning process. Here you choose a window size of 2500.

Tip: when choosing the window size make sure it's not too small, because when you perform windowed-normalization, it can introduce a break at the very end of each window, as each window is normalized independently.

In this example, 4 data points will be affected by this. But given you have 11,000 data points, 4 points will not cause any issue.
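To see what windowed normalization does, here is a small sketch that min-max scales each window on its own. It uses a hand-rolled NumPy min-max rather than scikit-learn's MinMaxScaler, and the function name windowed_min_max is hypothetical, so treat it as an illustration of the idea rather than the tutorial's exact procedure.

```python
import numpy as np


def windowed_min_max(series, window_size=2500):
    """Scale each consecutive window of the series to [0, 1] independently."""
    series = np.asarray(series, dtype=float)
    scaled = np.empty_like(series)
    for start in range(0, len(series), window_size):
        window = series[start:start + window_size]
        lo, hi = window.min(), window.max()
        span = hi - lo
        # A flat window has no range to scale by, so map it to zeros.
        scaled[start:start + window_size] = (window - lo) / span if span > 0 else 0.0
    return scaled


# Early prices are tiny compared with late ones, as in the real series.
prices = np.concatenate([np.linspace(1.0, 2.0, 5), np.linspace(50.0, 90.0, 5)])
scaled = windowed_min_max(prices, window_size=5)
```

In this toy series both halves end up spanning 0 to 1, so the early values are no longer squashed near 0. The jump from 1.0 back to 0.0 between the fifth and sixth points is the break at the window boundary that the tip above warns about.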

Reshape the data back to the shape of [data_size].

You can now smooth the data using the exponential moving average. This helps you to get rid of the inherent raggedness of the data in stock prices and produce a smoother curve.

Note that you should only smooth training data.

One-Step Ahead Prediction via Averaging

Averaging mechanisms allow you to predict (often one time step ahead) by representing the future stock price as an average of the previously observed stock prices. Doing this for more than one time step can produce quite bad results. You will look at two averaging techniques below: standard averaging and exponential moving average. You will evaluate the results produced by the two algorithms both qualitatively (visual inspection) and quantitatively (Mean Squared Error).

The Mean Squared Error (MSE) can be calculated by taking the Squared Error between the true value at one step ahead and the predicted value and averaging it over all the predictions.

Standard Average

You can understand the difficulty of this problem by first trying to model this as an average calculation problem. First you will try to predict the future stock market prices (for example, $x_{t+1}$) as an average of the previously observed stock market prices within a fixed-size window (for example, $x_{t-N+1}, \ldots, x_t$) (say, the previous 100 days). Thereafter you will try a slightly fancier "exponential moving average" method and see how well that does. Then you will move on to the "holy grail" of time-series prediction: Long Short-Term Memory models.

First you will see how normal averaging works. That is, you say,

$x_{t+1} = \frac{1}{N} \sum_{i=t-N+1}^{t} x_i$

In other words, you say the prediction at $t+1$ is the average value of all the stock prices you observed within a window of the $N$ most recent time steps, from $t-N+1$ to $t$.
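The windowed average described above, together with the MSE used to score it, could be sketched like this. The function name moving_average_predictions is hypothetical, and the window of 100 is just the "previous 100 days" example from the text.

```python
import numpy as np


def moving_average_predictions(series, window_size=100):
    """Predict each point as the mean of the window_size points before it."""
    series = np.asarray(series, dtype=float)
    preds = np.array([
        series[t - window_size:t].mean()
        for t in range(window_size, len(series))
    ])
    # Each prediction is compared with the true value one step ahead.
    targets = series[window_size:]
    mse = float(np.mean((preds - targets) ** 2))
    return preds, mse


# Small demo on a steadily rising series with a window of 2.
demo_preds, demo_mse = moving_average_predictions([1.0, 2.0, 3.0, 4.0, 5.0], window_size=2)
```

On a steadily rising series the average always lags behind the true value, which is why the demo error is not zero even though the data is perfectly regular.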

Take a look at the averaged results below. They follow the actual behavior of the stock quite closely. Next, you will look at a more accurate one-step prediction method.

So what do the above graphs (and the MSE) say?

It seems that it is not too bad of a model for very short predictions (one day ahead). Given that stock prices don't change from 0 to 100 overnight, this behavior is sensible. Next, you will look at a fancier averaging technique known as exponential moving average.

Exponential Moving Average

You might have seen some articles on the internet using very complex models and predicting almost the exact behavior of the stock market. But beware! These are just optical illusions and not due to learning something useful. You will see below how you can replicate that behavior with a simple averaging method.

In the exponential moving average method, you calculate $x_{t+1}$ as,

$x_{t+1} = EMA_t = \gamma \times EMA_{t-1} + (1-\gamma) x_t$, where $EMA_0 = 0$ and $EMA$ is the exponential moving average value you maintain over time.

The above equation calculates the exponential moving average up to time step $t$ and uses it as the one-step-ahead prediction for $t+1$. $\gamma$ decides how much of the previous EMA is carried forward, so $1-\gamma$ is the contribution of the most recent value. For example, $\gamma = 0.9$ lets only 10% of the current value into the EMA. Because you take only a very small fraction of the most recent value, the average preserves much older values you saw very early on. See how good this looks when used to predict one step ahead below.
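A minimal sketch of one-step-ahead prediction with this running average is shown below. It follows the update written above, with the previous EMA weighted by gamma and the newest value by 1 - gamma. The function name ema_predictions and the default gamma of 0.5 are assumptions made for the example.

```python
import numpy as np


def ema_predictions(series, gamma=0.5):
    """One-step-ahead forecasts from an exponential moving average."""
    series = np.asarray(series, dtype=float)
    preds = np.empty(len(series) - 1)
    ema = 0.0  # the running average starts at 0
    for t in range(len(series) - 1):
        ema = gamma * ema + (1.0 - gamma) * series[t]
        preds[t] = ema  # forecast for series[t + 1]
    mse = float(np.mean((preds - series[1:]) ** 2))
    return preds, mse


# On a constant series the forecast climbs from 0 towards the true value.
demo_preds, demo_mse = ema_predictions([1.0, 1.0, 1.0], gamma=0.5)
```

Starting the average at 0 means the first few forecasts are biased low; on a long series that warm-up effect fades quickly.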

If Exponential Moving Average is this Good, Why do You Need Better Models?

You see that it fits a near-perfect line that follows the true distribution (as justified by the very low MSE). Practically speaking, you can't do much with just the stock market value of the next day. Personally, what I'd like is not the exact stock market price for the next day, but whether the stock market prices will go up or down in the next 30 days. Try to do this, and you will expose the incapability of the EMA method.

You will now try to make predictions in windows (say you predict the next 2 days window, instead of just the next day). Then you will realize how wrong EMA can go. Here is an example:

Predict More Than One Step into the Future

To make things concrete, let's assume some values, say $x_t = 0.4$, $EMA_{t-1} = 0.5$ and $\gamma = 0.5$.

Say you get the output with the following equation:

$x_{t+1} = EMA_t = \gamma \times EMA_{t-1} + (1-\gamma) x_t$

So you have $x_{t+1} = 0.5 \times 0.5 + (1-0.5) \times 0.4 = 0.45$

So $x_{t+1} = EMA_t = 0.45$

So the next prediction $x_{t+2}$ becomes,

$x_{t+2} = \gamma \times EMA_t + (1-\gamma) x_{t+1}$

Which is $x_{t+2} = \gamma \times EMA_t + (1-\gamma) EMA_t = EMA_t$

Or in this example, $x_{t+2} = x_{t+1} = 0.45$

So no matter how many steps you predict into the future, you'll keep getting the same answer for all the future prediction steps.
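You can check this collapse numerically with a few lines of code. The sketch below reuses the numbers from the worked example and feeds each prediction back in as the next input; the function name ema_multi_step is hypothetical.

```python
def ema_multi_step(ema_prev, x_t, gamma, n_steps):
    """Roll the EMA forward n_steps, using each prediction as the next input."""
    predictions = []
    ema, x = ema_prev, x_t
    for _ in range(n_steps):
        ema = gamma * ema + (1.0 - gamma) * x
        predictions.append(ema)
        x = ema  # no new observation is available, so reuse the prediction
    return predictions


preds = ema_multi_step(ema_prev=0.5, x_t=0.4, gamma=0.5, n_steps=4)
```

Every entry in preds equals 0.45 up to floating-point rounding: once the input and the running average coincide, the update has nothing left to change.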

One solution you have that will output useful information is to look at momentum-based algorithms. They make predictions based on whether the past recent values were going up or going down (not the exact values). For example, they will say the next day price is likely to be lower, if the prices have been dropping for the past days, which sounds reasonable. However, you will use a more complex model: an LSTM model.

These models have taken the realm of time series prediction by storm, because they are so good at modelling time series data. You will see if there actually are patterns hidden in the data that you can exploit.

Introduction to LSTMs: Making Stock Movement Predictions Far into the Future

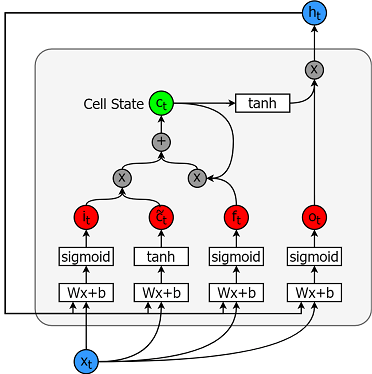

Long Short-Term Memory models are extremely powerful time-series models. They can predict an arbitrary number of steps into the future. An LSTM module (or cell) has 5 essential components that allow it to model both long-term and short-term data.

Cell state ($c_t$) - This represents the internal memory of the cell, which stores both short-term and long-term memories

Hidden state ($h_t$) - This is the output state information calculated w.r.t. the current input, previous hidden state and current cell input, which you eventually use to predict the future stock market prices. Additionally, the hidden state can decide to retrieve only the short-term memory, only the long-term memory, or both types of memory stored in the cell state to make the next prediction.

Input gate ($i_t$) - Decides how much information from the current input flows to the cell state

Forget gate ($f_t$) - Decides how much information from the previous cell state is kept in the current cell state

Output gate ($o_t$) - Decides how much information from the current cell state flows into the hidden state, so that, if needed, the LSTM can pick only the long-term memories, or both the short-term and long-term memories

A cell is pictured below.

And the equations for calculating each of these entities are as follows.

$i_t = \sigma(W_{ix}x_t + W_{ih}h_{t-1}+b_i)$

$\tilde{c}_t = \tanh(W_{cx}x_t + W_{ch}h_{t-1} + b_c)$

$f_t = \sigma(W_{fx}x_t + W_{fh}h_{t-1}+b_f)$

$c_t = f_t c_{t-1} + i_t \tilde{c}_t$

$o_t = \sigma(W_{ox}x_t + W_{oh}h_{t-1}+b_o)$

$h_t = o_t \tanh(c_t)$
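To make the gate equations less abstract, here is a small NumPy sketch of a single LSTM step. It is an illustration only, not the TensorFlow implementation used later. The candidate cell state uses tanh, which is the usual choice, and the dictionary keys such as "W_ix" and "b_i" are names assumed for this example.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, params):
    """Compute (h_t, c_t) from the current input and the previous states."""
    def affine(gate):
        # W_{gate,x} x_t + W_{gate,h} h_{t-1} + b_gate
        return (params["W_%sx" % gate] @ x_t
                + params["W_%sh" % gate] @ h_prev
                + params["b_%s" % gate])

    i_t = sigmoid(affine("i"))          # input gate
    c_tilde = np.tanh(affine("c"))      # candidate cell state
    f_t = sigmoid(affine("f"))          # forget gate
    c_t = f_t * c_prev + i_t * c_tilde  # new cell state
    o_t = sigmoid(affine("o"))          # output gate
    h_t = o_t * np.tanh(c_t)            # new hidden state
    return h_t, c_t


# Random small weights for a cell with 1 input feature and 4 hidden units.
rng = np.random.default_rng(0)
D, H = 1, 4
params = {}
for gate in "icfo":
    params["W_%sx" % gate] = rng.normal(scale=0.1, size=(H, D))
    params["W_%sh" % gate] = rng.normal(scale=0.1, size=(H, H))
    params["b_%s" % gate] = np.zeros(H)

h_t, c_t = lstm_step(np.array([0.4]), np.zeros(H), np.zeros(H), params)
```

Because the output gate lies between 0 and 1 and tanh lies between -1 and 1, every entry of h_t stays strictly inside (-1, 1), whatever the weights are.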

For a better (more technical) understanding about LSTMs you can refer to this article.

TensorFlow provides a nice sub API (called RNN API) for implementing time series models. You will be using that for your implementations.

Data Generator

You are first going to implement a data generator to train your model. This data generator will have a method called .unroll_batches(...) which will output a set of num_unrollings batches of input data obtained sequentially, where a batch of data is of size [batch_size, 1]. Then each batch of input data will have a corresponding output batch of data.

For example, if num_unrollings=3 and batch_size=4, a set of unrolled batches might look like,

input data: $[x_0,x_{10},x_{20},x_{30}], [x_1,x_{11},x_{21},x_{31}], [x_2,x_{12},x_{22},x_{32}]$

output data: $[x_1,x_{11},x_{21},x_{31}], [x_2,x_{12},x_{22},x_{32}], [x_3,x_{13},x_{23},x_{33}]$

Data Augmentation

Also, to make your model robust, you will not make the output for $x_t$ always $x_{t+1}$. Rather, you will randomly sample an output from the set $x_{t+1},x_{t+2},\ldots,x_{t+N}$, where $N$ is a small window size.

Here you are making the following assumption:

$x_{t+1},x_{t+2},\ldots,x_{t+N}$ will not be very far from each other

I personally think this is a reasonable assumption for stock movement predictions.

Below you illustrate how a batch of data is created visually.
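The batching and label sampling described in the last two sections can be sketched as a small class. This is a simplified stand-in, not the tutorial's generator: the class name SequenceBatcher, the max_offset argument (the window size N) and the wrap-around rule are assumptions, and batches are kept flat with shape [batch_size] instead of [batch_size, 1] for brevity.

```python
import numpy as np


class SequenceBatcher:
    """Hypothetical, simplified data generator for unrolled batches."""

    def __init__(self, prices, batch_size, num_unrollings, max_offset=5, seed=0):
        self.prices = np.asarray(prices, dtype=float)
        self.batch_size = batch_size
        self.num_unrollings = num_unrollings
        self.max_offset = max_offset  # N: labels are drawn from x[t+1 .. t+N]
        self.rng = np.random.default_rng(seed)
        # Each row of a batch walks through its own contiguous segment.
        self.segment = len(self.prices) // batch_size
        self.cursor = [b * self.segment for b in range(batch_size)]

    def next_batch(self):
        inputs = np.empty(self.batch_size)
        labels = np.empty(self.batch_size)
        last = len(self.prices) - 1
        for b in range(self.batch_size):
            if self.cursor[b] >= last:
                # No later value left: restart this row at its segment start.
                self.cursor[b] = b * self.segment
            t = self.cursor[b]
            offset = int(self.rng.integers(1, self.max_offset + 1))
            inputs[b] = self.prices[t]
            labels[b] = self.prices[min(t + offset, last)]
            self.cursor[b] = t + 1
        return inputs, labels

    def unroll_batches(self):
        batches = [self.next_batch() for _ in range(self.num_unrollings)]
        return [x for x, _ in batches], [y for _, y in batches]


# With prices 0..39 the value at index k is k, so batches are easy to read.
generator = SequenceBatcher(np.arange(40.0), batch_size=4, num_unrollings=3, max_offset=1)
inputs, labels = generator.unroll_batches()
```

With max_offset=1 the label is always the next value, which gives exactly the input and output layout from the example with num_unrollings=3 and batch_size=4. Raising max_offset turns on the random label sampling.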

Defining Hyperparameters

In this section, you'll define several hyperparameters. D is the dimensionality of the input. It's straightforward: you take the previous stock price as the input and predict the next one, so D should be 1.

Then you have num_unrollings. This is a hyperparameter related to backpropagation through time (BPTT), which is used to optimize the LSTM model. It denotes how many continuous time steps you consider for a single optimization step. You can think of this as, instead of optimizing the model by looking at a single time step, you optimize the network by looking at num_unrollings time steps. The larger the better.

Then you have the batch_size. Batch size is how many data samples you consider in a single time step.

Next you define num_nodes which represents the number of hidden neurons in each cell. You can see that there are three layers of LSTMs in this example.
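Put together, the hyperparameters might be declared along these lines. The numbers are illustrative placeholders rather than values fixed by this section, and the dropout rate in particular is an assumption, so tune them for your own data.

```python
# Illustrative values only; adjust them for your data and hardware.
D = 1                        # input dimensionality: one price per time step
num_unrollings = 50          # time steps unrolled per optimization step (BPTT)
batch_size = 500             # data samples considered in a single time step
num_nodes = [200, 200, 150]  # hidden neurons in each of the three LSTM layers
n_layers = len(num_nodes)    # number of stacked LSTM layers
dropout = 0.2                # assumed dropout rate, if dropout is enabled

# Shape of the stacked inputs the LSTM sees in one optimization step.
input_shape = (num_unrollings, batch_size, D)
```

Deriving n_layers from num_nodes keeps the two from drifting apart when you add or remove a layer.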

Defining Inputs and Outputs

Next you define placeholders for training inputs and labels. This is very straightforward as you have a list of input placeholders, where each placeholder contains a single batch of data. And the list has num_unrollings placeholders, that will be used at once for a single optimization step.

Defining Parameters of the LSTM and Regression layer

You will have three layers of LSTMs and a linear regression layer, denoted by w and b, that takes the output of the last Long Short-Term Memory cell and outputs the prediction for the next time step. You can use the MultiRNNCell in TensorFlow to encapsulate the three LSTMCell objects you created. Additionally, you can wrap the LSTM cells with dropout, as it improves performance and reduces overfitting.

Calculating LSTM output and Feeding it to the regression layer to get final prediction

In this section, you first create TensorFlow variables (c and h) that will hold the cell state and the hidden state of the Long Short-Term Memory cell. Then you transform the list of train_inputs to have a shape of [num_unrollings, batch_size, D], which is needed for calculating the outputs with the tf.nn.dynamic_rnn function. You then calculate the LSTM outputs with the tf.nn.dynamic_rnn function and split the output back into a list of num_unrollings tensors. These are then used to compute the loss between the predictions and the true stock prices.

Loss Calculation and Optimizer

Now, you'll calculate the loss. However, you should note that there is a unique characteristic when calculating the loss. For each batch of predictions and true outputs, you calculate the Mean Squared Error. And you sum (not average) all these mean squared losses together. Finally, you define the optimizer you're going to use to optimize the neural network. In this case, you can use Adam, which is a widely used and well-performing optimizer.
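The "mean within a batch, sum across unrolled steps" rule is easy to get wrong, so here is a small NumPy sketch of it. It only mirrors the arithmetic, not the TensorFlow graph, and the function name summed_mse_loss is hypothetical.

```python
import numpy as np


def summed_mse_loss(predictions, targets):
    """Sum over unrolled steps of the per-batch mean squared error."""
    return float(sum(
        np.mean((np.asarray(p, dtype=float) - np.asarray(y, dtype=float)) ** 2)
        for p, y in zip(predictions, targets)
    ))


# Two unrolled steps with a batch of two samples each.
loss = summed_mse_loss(
    predictions=[[1.0, 2.0], [3.0, 4.0]],
    targets=[[0.0, 0.0], [3.0, 2.0]],
)
```

Because the per-step losses are summed, the total grows with num_unrollings, so losses from runs with different unrolling lengths are not directly comparable.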

Prediction Related Calculations

Here you define the prediction-related TensorFlow operations. First, define a placeholder for feeding in the input (sample_inputs). Then, similar to the training stage, you define state variables for prediction (sample_c and sample_h). Finally, you calculate the prediction with the tf.nn.dynamic_rnn function and then send the output through the regression layer (w and b). You should also define the reset_sample_state operation, which resets the cell state and the hidden state. You should execute this operation at the start, every time you make a sequence of predictions.

Running the LSTM

Here you will train and predict stock price movements for several epochs and see whether the predictions get better or worse over time. The procedure is as follows.

- Define a test set of starting points (test_points_seq) on the time series to evaluate the model on
- For each epoch
  - For the full sequence length of the training data
    - Unroll a set of num_unrollings batches
    - Train the neural network with the unrolled batches
  - Calculate the average training loss
  - For each starting point in the test set
    - Update the LSTM state by iterating through the previous num_unrollings data points found before the test point
    - Make predictions for n_predict_once steps continuously, using the previous prediction as the current input
    - Calculate the MSE loss between the n_predict_once points predicted and the true stock prices at those time stamps
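The evaluation part of this procedure (warm up the state on real data, then keep feeding the model its own predictions) can be sketched independently of TensorFlow. In the sketch below, step_fn is a hypothetical stand-in for one forward pass of the trained LSTM: it takes the current input and state and returns a prediction and the new state. It assumes num_unrollings is at least 1 and that start is at least num_unrollings.

```python
import numpy as np


def predict_from_point(step_fn, init_state, series, start, num_unrollings, n_predict_once):
    """Warm up on real data before `start`, then roll forward on own predictions."""
    state = init_state
    prediction = None
    # Update the state with the true values that precede the test point.
    for t in range(start - num_unrollings, start):
        prediction, state = step_fn(series[t], state)
    predictions = []
    for _ in range(n_predict_once):
        predictions.append(prediction)
        # Use the previous prediction as the current input.
        prediction, state = step_fn(prediction, state)
    predictions = np.array(predictions)
    truth = np.asarray(series[start:start + n_predict_once], dtype=float)
    mse = float(np.mean((predictions - truth) ** 2))
    return predictions, mse


def drift_step(x, prev):
    """Toy model: extrapolate the last observed change; state is the previous input."""
    prediction = x if prev is None else x + (x - prev)
    return prediction, x


series = np.arange(10.0)
preds, mse = predict_from_point(drift_step, None, series, start=5,
                                num_unrollings=3, n_predict_once=3)
```

The toy drift model is only there to make the loop runnable. On a straight line it extrapolates perfectly, which makes it easy to confirm that the rollout lines up with the right time stamps.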

Visualizing the Predictions

You can see how the MSE loss is going down with the amount of training. This is a good sign that the model is learning something useful. To quantify your findings, you can compare the network's MSE loss to the MSE loss you obtained when doing the standard averaging (0.004). You can see that the LSTM is doing better than the standard averaging. And you know that standard averaging (though not perfect) followed the true stock price movements reasonably.

Though not perfect, LSTMs seem to be able to predict stock price behavior correctly most of the time. Note that you are making predictions roughly in the range of 0 and 1.0 (that is, not the true stock prices). This is okay, because you're predicting the stock price movement, not the prices themselves.

Final Remarks

I'm hoping that you found this tutorial useful. I should mention that this was a rewarding experience for me. In this tutorial, I learnt how difficult it can be to devise a model that is able to correctly predict stock price movements. You started with a motivation for why you need to model stock prices. This was followed by an explanation and code for downloading data. Then you looked at two averaging techniques that allow you to make predictions one step into the future. You next saw that these methods are futile when you need to predict more than one step into the future. Thereafter you discussed how you can use LSTMs to make predictions many steps into the future. Finally you visualized the results and saw that your model (though not perfect) is quite good at correctly predicting stock price movements.

Here, I'm stating several takeaways of this tutorial.

Stock price/movement prediction is an extremely difficult task. Personally, I don't think any of the stock prediction models out there should be taken for granted or relied on blindly. Models might be able to predict stock price movement correctly most of the time, but not always.

Do not be fooled by articles out there that show prediction curves that perfectly overlap the true stock prices. This can be replicated with a simple averaging technique, and in practice it's useless. A more sensible thing to do is to predict the stock price movements.

The results you obtain are extremely sensitive to the model's hyperparameters. So a very good thing to do would be to run some hyperparameter optimization technique (for example, grid search or random search) on the hyperparameters. Below I have listed some of the most critical hyperparameters:

The learning rate of the optimizer

Number of layers and the number of hidden units in each layer

The optimizer. I found Adam to perform the best

Type of the model. You can try GRU / standard LSTM / LSTM with peepholes and evaluate the performance difference

In this tutorial you did something faulty (due to the small size of the data)! That is, you used the test loss to decay the learning rate. This indirectly leaks information about the test set into the training procedure. A better way of handling this is to have a separate validation set (apart from the test set) and decay the learning rate with respect to the performance on the validation set.
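A validation-driven decay rule might look like the sketch below. It assumes you have carved a validation slice out of the training period and computed one validation loss per epoch. The function name decay_learning_rate and the decay, patience and min_lr parameters are hypothetical choices for this example.

```python
def decay_learning_rate(val_losses, initial_lr=0.0001, decay=0.5, patience=2, min_lr=1e-6):
    """Return the learning rate used in each epoch, decaying on validation plateaus."""
    lr = initial_lr
    best = float("inf")
    stale = 0  # epochs since the validation loss last improved
    schedule = []
    for loss in val_losses:
        schedule.append(lr)
        if loss < best:
            best, stale = loss, 0
        else:
            stale += 1
            if stale >= patience:
                lr = max(lr * decay, min_lr)
                stale = 0
    return schedule


# The loss stalls in epochs 2 and 3, so the rate is halved for epoch 4.
schedule = decay_learning_rate([1.0, 0.8, 0.9, 0.85, 0.7], initial_lr=0.1)
```

Only the validation losses drive the schedule here, so the test set stays untouched until the final evaluation.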

If you'd like to get in touch with me, you can drop me an e-mail at thushv@gmail.com or connect with me via LinkedIn.

References

I referred to this repository to get an understanding about how to use LSTMs for stock predictions. But details can be vastly different from the implementation found in the reference.

Article source: https://www.datacamp.com/community/tutorials/lstm-python-stock-market
